project

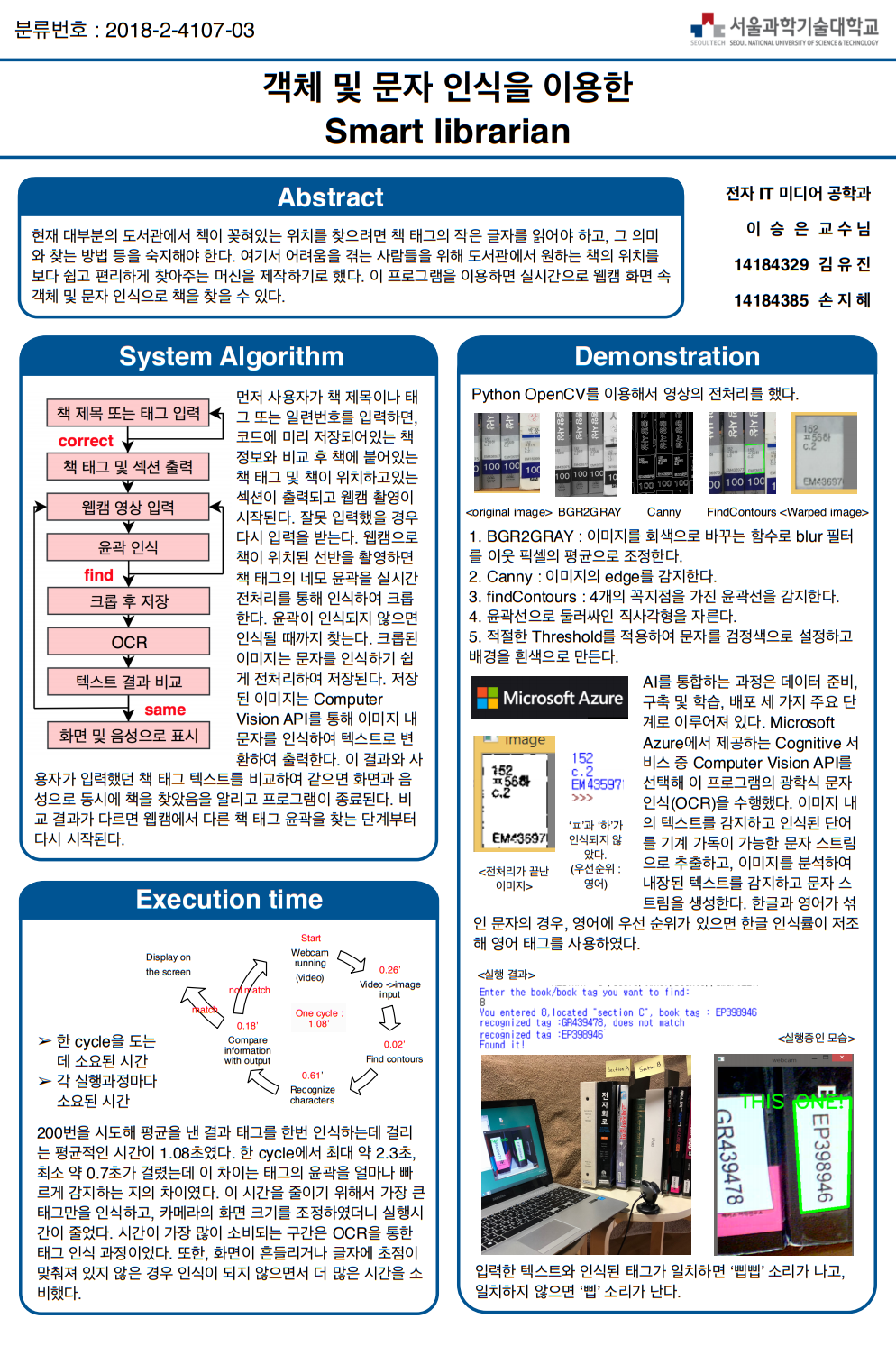

Smart Librarian by Python Optical Character Recognition

yz

2021. 4. 24. 00:48


from PIL import Image

import http.client, urllib.request, urllib.parse, urllib.error, base64, json

import cv2

import numpy as np

import winsound

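def order_points(pts):
    # Order four corner points as top-left, top-right, bottom-right, bottom-left.
    rect = np.zeros((4, 2), dtype="float32")
    # x + y is smallest at the top-left corner and largest at the bottom-right.
    s = pts.sum(axis=1)
    rect[0] = pts[np.argmin(s)]
    rect[2] = pts[np.argmax(s)]
    # y - x is smallest at the top-right corner and largest at the bottom-left.
    diff = np.diff(pts, axis=1)
    rect[1] = pts[np.argmin(diff)]
    rect[3] = pts[np.argmax(diff)]
    return rect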
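To see what `order_points` returns, here is a minimal sketch of the same sum/difference ordering in plain Python, without NumPy. The name `order_corners` and the sample coordinates are illustrative assumptions, not part of the original script.

```python
def order_corners(pts):
    """Return corners as [top-left, top-right, bottom-right, bottom-left].

    pts is a list of four (x, y) tuples in image coordinates (y grows downward).
    """
    # x + y: smallest at the top-left, largest at the bottom-right.
    by_sum = sorted(pts, key=lambda p: p[0] + p[1])
    # y - x: smallest at the top-right, largest at the bottom-left.
    by_diff = sorted(pts, key=lambda p: p[1] - p[0])
    top_left, bottom_right = by_sum[0], by_sum[-1]
    top_right, bottom_left = by_diff[0], by_diff[-1]
    return [top_left, top_right, bottom_right, bottom_left]


# Hypothetical corners of a slightly tilted book tag, given in arbitrary order.
corners = [(210, 40), (20, 30), (25, 120), (215, 135)]
ordered = order_corners(corners)
print(ordered)
```

The fixed output order is what lets the later perspective transform pair each detected corner with the matching corner of the flat destination rectangle.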

def print_text(json_data):
    # Store the text of the last OCR line in the global t1 (words joined without spaces).
    global t1
    result = json.loads(json_data)
    for l in result['regions']:
        for w in l['lines']:
            line = []
            for r in w['words']:
                line.append(r['text'])
            t1 = ''.join(line)
    return
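The loops in `print_text` walk a response nested as regions, then lines, then words. The sketch below returns every recognized line instead of writing to a global. The helper name `extract_lines` is hypothetical, and the `sample` payload is hand-written to mirror only the keys the script reads; it is not a real API response.

```python
import json


def extract_lines(json_data):
    """Return one string per OCR line, with the words joined without spaces."""
    result = json.loads(json_data)
    lines = []
    for region in result.get('regions', []):
        for line in region.get('lines', []):
            lines.append(''.join(word['text'] for word in line['words']))
    return lines


# Hand-written sample shaped like the keys print_text reads (hypothetical data).
sample = json.dumps({
    'regions': [
        {'lines': [
            {'words': [{'text': 'GR'}, {'text': '439478'}]},
        ]},
    ],
})
tags = extract_lines(sample)
print(tags)
```

Joining without spaces matches the script, so a tag that the OCR splits into `GR` and `439478` still compares equal to `GR439478`.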

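def ocr_project_oxford(headers, params, data):
    # POST the image bytes to the OCR endpoint and pass the JSON reply to print_text.
    conn = http.client.HTTPSConnection('westcentralus.api.cognitive.microsoft.com')
    try:
        conn.request("POST", "/vision/v1.0/ocr?%s" % params, data, headers)
        response = conn.getresponse()
        data = response.read().decode()
        #print (data)
        print_text(data)
    finally:
        # Close the connection even if the request or the parsing fails.
        conn.close()
    return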
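The request path in `ocr_project_oxford` is the endpoint path plus a URL-encoded query string. Here is a minimal sketch of how that string can be built with the standard library. `build_ocr_path` is a hypothetical helper, and the parameter names are the ones this script sends.

```python
import urllib.parse


def build_ocr_path(language='en', detect_orientation=True):
    """Build the OCR request path used in the script's POST call."""
    params = urllib.parse.urlencode({
        'language': language,
        'detectOrientation': 'true' if detect_orientation else 'false',
    })
    return "/vision/v1.0/ocr?%s" % params


path = build_ocr_path()
print(path)

# A stray space in a key is encoded as '+', which changes the parameter name.
stray = urllib.parse.urlencode({'detectOrientation ': 'true'})
print(stray)
```

Because `urlencode` encodes keys as well as values, whitespace inside a parameter name is sent to the server as part of the name, so it is worth checking the dictionary keys character by character.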

def auto_scan_image_via_webcam():
    global frame
    cap = cv2.VideoCapture(0)
    # VideoCapture does not raise on failure, so check isOpened() instead of using try/except.
    if not cap.isOpened():
        print('cannot open camera')
        return
    warped = None
    while True:
        ret, frame1 = cap.read()
        # Check the read result before cropping; frame1 is None when the read fails.
        if not ret:
            print('cannot open camera')
            break
        frame = frame1[10:800, 50:350]
        k = cv2.waitKey(10)
        if k == 27:
            break
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        gray = cv2.GaussianBlur(gray, (5, 5), 0)
        edged = cv2.Canny(gray, 80, 200)
        # findContours returns 3 values in OpenCV 3 and 2 in OpenCV 4;
        # the contour list is second from last in both.
        cnts = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)[-2]
        cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:5]
        screenCnt = []
        # loop over the contours
        for c in cnts:
            # approximate the contour
            peri = cv2.arcLength(c, True)
            approx = cv2.approxPolyDP(c, 0.02 * peri, True)
            # if our approximated contour has four points, then we
            # can assume that we have found our screen
            if len(approx) == 4:
                contourSize = cv2.contourArea(approx)
                camSize = frame.shape[0] * frame.shape[1]
                ratio = contourSize / camSize
                ###print (contourSize)
                ##print (camSize)
                ##print (ratio)
                if ratio > 0.01:
                    screenCnt = approx
                    break
        if len(screenCnt) == 0:
            cv2.imshow('webcam', frame)
            continue
        else:
            cv2.drawContours(frame, [screenCnt], -1, (0, 255, 0), 5)
            cv2.imshow('webcam', frame)
            rect = order_points(screenCnt.reshape(4, 2))
            (topLeft, topRight, bottomRight, bottomLeft) = rect
            w1 = abs(bottomRight[0] - bottomLeft[0])
            w2 = abs(topRight[0] - topLeft[0])
            h1 = abs(topRight[1] - bottomRight[1])
            h2 = abs(topLeft[1] - bottomLeft[1])
            # warpPerspective needs integer output dimensions.
            maxWidth = int(max([w1, w2]))
            maxHeight = int(max([h1, h2]))
            dst = np.float32([[0, 0], [maxWidth - 1, 0],
                              [maxWidth - 1, maxHeight - 1], [0, maxHeight - 1]])
            M = cv2.getPerspectiveTransform(rect, dst)
            warped = cv2.warpPerspective(frame, M, (maxWidth, maxHeight))
            warped = cv2.cvtColor(warped, cv2.COLOR_BGR2GRAY)
            warped = cv2.adaptiveThreshold(warped, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 21, 10)
            break
    cap.release()
    # No tag was captured (ESC pressed or the camera read failed), so there is nothing to send.
    if warped is None:
        return
    cv2.imwrite('scannedbooktag.png', warped)
    headers = {
        # Request headers
        'Content-Type': 'application/octet-stream',
        'Ocp-Apim-Subscription-Key': '8b19b33ce85d4fda96c9ffd825105795',
    }
    params = urllib.parse.urlencode({
        # Request parameters
        'language': 'en',
        'detectOrientation': 'true',
    })
    with open('scannedbooktag.png', 'rb') as f:
        data = f.read()
    try:
        image_file = 'scannedbooktag.png'
        ocr_project_oxford(headers, params, data)
    except Exception as e:
        print(e)
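The main block that follows accepts a menu number, a title, or a tag (upper or lower case) for each book. One way to express the same lookup is a table keyed by every accepted input. This is a sketch of an alternative, not the script's own structure: `BOOKS`, `CATALOG`, and `find_book` are hypothetical names, and only two rows from the script are shown.

```python
# Each row: (menu number, title, tag, section). Two rows reused from the script as examples.
BOOKS = [
    ('1', 'Control Systems Engineering', 'GR439478', 'A'),
    ('5', '전자회로', 'FU462694', 'B'),
]

# Map every accepted input (number, title, tag, lower-case tag) to (tag, section).
CATALOG = {}
for number, title, tag, section in BOOKS:
    for key in (number, title, tag, tag.lower()):
        CATALOG[key] = (tag, section)


def find_book(query):
    """Return (tag, section) for a known input, or None if nothing matches."""
    return CATALOG.get(query)


print(find_book('gr439478'))
print(find_book('unknown'))
```

With a table like this, adding a book means adding one row rather than another `elif` branch.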

if __name__ == '__main__':
    frame = ''
    # Ask until the input matches a known menu number, title, or book tag.
    while True:
        print("Enter the book/book tag you want to find:")
        t2 = input('')
        ########## section A ##########
        if t2 == "1" or t2 == "Control Systems Engineering" or t2 == 'GR439478' or t2 == 'gr439478':
            a = 'GR439478'
            print("You entered " + t2 + ', located in "section A", book tag : ' + a)
            break
        elif t2 == "2" or t2 == '제어시스템공학' or t2 == 'NA793292' or t2 == 'na793292':
            a = 'NA793292'
            print("You entered " + t2 + ', located in "section A", book tag : ' + a)
            break
        elif t2 == "3" or t2 == '이동통신공학' or t2 == 'ZD276468' or t2 == 'zd276468':
            a = 'ZD276468'
            print("You entered " + t2 + ', located in "section A", book tag : ' + a)
            break
        elif t2 == "4" or t2 == '고체전자공학' or t2 == 'UZ637949' or t2 == 'uz637949':
            a = 'UZ637949'
            print("You entered " + t2 + ', located in "section A", book tag : ' + a)
            break
        ########## section B ##########
        elif t2 == "5" or t2 == "전자회로" or t2 == 'FU462694' or t2 == 'fu462694':
            a = 'FU462694'
            print("You entered " + t2 + ', located in "section B", book tag : ' + a)
            break
        ########## section C ##########
        elif t2 == "6" or t2 == "선형대수" or t2 == 'LR336246' or t2 == 'lr336246':
            a = 'LR336246'
            print("You entered " + t2 + ', located in "section C", book tag : ' + a)
            break
        elif t2 == "7" or t2 == "이공계대학수학" or t2 == 'EP398946' or t2 == 'ep398946':
            a = 'EP398946'
            print("You entered " + t2 + ', located in "section C", book tag : ' + a)
            break
        elif t2 == "8" or t2 == "수학의 정석" or t2 == 'AQ682989' or t2 == 'aq682989':
            a = 'AQ682989'
            print("You entered " + t2 + ', located in "section C", book tag : ' + a)
            break
        else:
            print('no information')

    # Keep scanning until the recognized tag matches the requested one.
    while True:
        t1 = ''
        auto_scan_image_via_webcam()
        if t1 == a:
            print("recognized tag :" + t1)
            break
        # Empty read: very short beep; wrong recognition: long beep; match: double beep
        elif t1 == '':
            print("Please focus")
            duration = 400
            freq = 150
            winsound.Beep(freq, duration)
        else:
            print("recognized tag :" + t1 + ", does not match")
            duration = 400
            freq = 440
            winsound.Beep(freq, duration)
    print("Found it!")
    cv2.putText(frame, 'THIS ONE!', (50, 100), cv2.FONT_HERSHEY_SIMPLEX, 1.5, (0, 255, 0), 4)
    cv2.imshow("webcam", frame)
    duration = 400
    freq = 800
    winsound.Beep(freq, duration)
    winsound.Beep(freq, duration)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
    cv2.waitKey(1)

Professor: Seungeun Lee

Members: Yoojin Kim, Jihye Son
